CSE authors present six papers at MICRO 2021

Six papers co-authored by 12 CSE researchers were presented at the 2021 IEEE/ACM International Symposium on Microarchitecture (MICRO), the premier research forum for new microarchitecture techniques that advance computing and communication systems. This is the strongest showing U-M has had at MICRO in a number of years, with eight total papers presented from the whole of the University of Michigan.

One submission from CSE co-authored by PhD student Harini Muthukrishnan and Adjunct Prof. Thomas Wenisch was also nominated for the conference’s Best Paper Award, and Prof. Yatin Manerkar chaired the event’s session on parallelism.

The six papers covered computing on encrypted data, multi-GPU memory management, reducing frontend data center stalls, runtime control for reconfigurable hardware accelerators, an accelerator for portable virus detection, and a new method for prefetching instructions in data centers. Learn more about these projects:

F1: A Fast and Programmable Accelerator for Fully Homomorphic Encryption

Nikola Samardzic, Axel Feldmann, Aleksandar Krastev, Srinivas Devadas (MIT); Ronald Dreslinski, Christopher Peikert (University of Michigan); Daniel Sanchez (MIT)

Abstract: Fully Homomorphic Encryption (FHE) allows computing on encrypted data, enabling secure offloading of computation to untrusted servers. Though it provides ideal security, FHE is expensive when executed in software, 4 to 5 orders of magnitude slower than computing on unencrypted data. These overheads are a major barrier to FHE’s widespread adoption. We present F1, the first FHE accelerator that is programmable, i.e., capable of executing full FHE programs. F1 builds on an in-depth architectural analysis of the characteristics of FHE computations that reveals acceleration opportunities. F1 is a wide-vector processor with novel functional units deeply specialized to FHE primitives, such as modular arithmetic, number-theoretic transforms, and structured permutations. This organization provides so much compute throughput that data movement becomes the bottleneck. Thus, F1 is primarily designed to minimize data movement. The F1 hardware provides an explicitly managed memory hierarchy and mechanisms to decouple data movement from execution. A novel compiler leverages these mechanisms to maximize reuse and schedule off-chip and on-chip data movement. We evaluate F1 using cycle-accurate simulations and RTL synthesis. F1 is the first system to accelerate complete FHE programs and outperforms state-of-the-art software implementations by gmean 5400x and by up to 17000x. These speedups counter most of FHE’s overheads and enable new applications, like real-time private deep learning in the cloud.
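
For readers unfamiliar with the primitives named in the abstract, the following is a minimal software sketch of a number-theoretic transform (NTT) built on modular arithmetic. It is not F1's design: the function names, the naive quadratic-time transform, and the tiny parameters suggested in the comments are all assumptions chosen for readability, and real FHE schemes use far larger moduli and fast transforms.

```python
# Illustrative only: a naive O(n^2) number-theoretic transform over Z_modulus.
# `root` must be a primitive n-th root of unity modulo a prime `modulus`
# (for example root=4, modulus=17 for length-4 inputs; toy values, not FHE-sized).

def ntt(values, root, modulus):
    """Evaluate the polynomial with coefficients `values` at powers of `root`."""
    n = len(values)
    return [
        sum(values[j] * pow(root, i * j, modulus) for j in range(n)) % modulus
        for i in range(n)
    ]

def inverse_ntt(values, root, modulus):
    """Invert `ntt` by transforming with root^-1 and scaling by n^-1."""
    n = len(values)
    inv_n = pow(n, -1, modulus)        # modular inverse (Python 3.8+)
    inv_root = pow(root, -1, modulus)
    return [(inv_n * x) % modulus for x in ntt(values, inv_root, modulus)]

def cyclic_multiply(a, b, root, modulus):
    """Multiply two polynomials modulo x^n - 1 using pointwise products."""
    fa = ntt(a, root, modulus)
    fb = ntt(b, root, modulus)
    pointwise = [(x * y) % modulus for x, y in zip(fa, fb)]
    return inverse_ntt(pointwise, root, modulus)
```

The point of the transform is that polynomial multiplication becomes an element-wise product, which is the kind of regular, wide-vector work a specialized functional unit can exploit.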

GPS: A Global Publish Subscribe Model for Multi-GPU Memory Management

Best Paper Nominee

Harini Muthukrishnan (University of Michigan); Daniel Lustig, David Nellans (NVIDIA); Thomas Wenisch (University of Michigan)

Abstract: Suboptimal management of memory and bandwidth is one of the primary causes of low performance on systems comprising multiple GPUs. Existing memory management solutions like Unified Memory (UM) offer simplified programming but come at the cost of performance: applications can even exhibit slowdown with increasing GPU count due to their inability to leverage system resources effectively. To solve this challenge, we propose GPS, a HW/SW multi-GPU memory management technique that efficiently orchestrates inter-GPU communication using proactive data transfers. GPS offers the programmability advantage of multi-GPU shared memory with the performance of GPU-local memory. To enable this, GPS automatically tracks the data accesses performed by each GPU, maintains duplicate physical replicas of shared regions in each GPU’s local memory, and pushes updates to the replicas in all consumer GPUs. GPS is compatible with the existing NVIDIA GPU memory consistency model but takes full advantage of its relaxed nature to deliver high performance. We evaluate GPS in the context of a 4-GPU system with varying interconnects and show that GPS achieves an average speedup of 3.0× relative to the performance of a single GPU, outperforming the next best available multi-GPU memory management technique by 2.3× on average. In a 16-GPU system, using a future PCIe 6.0 interconnect, we demonstrate a 7.9× average strong scaling speedup over single-GPU performance, capturing 80% of the available opportunity.
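
The publish-subscribe idea in the abstract can be pictured with a toy software model. This is only a sketch under simplifying assumptions: the class `ToyGPS` and its methods are hypothetical names, subscriptions are made explicitly here (the abstract says GPS tracks accesses automatically), and nothing about consistency or interconnect behavior is modeled.

```python
# Toy model of publish-subscribe replication across GPUs (hypothetical names).
# Each GPU keeps a local replica; a write is pushed to every subscriber,
# so later reads by subscribers are served from their own local memory.

class ToyGPS:
    def __init__(self, num_gpus):
        # One local replica per GPU: address -> value.
        self.local = [dict() for _ in range(num_gpus)]
        # address -> set of GPU ids that consume that address.
        self.subscribers = {}

    def subscribe(self, gpu, address):
        """Register `gpu` as a consumer of `address`."""
        self.subscribers.setdefault(address, set()).add(gpu)

    def write(self, gpu, address, value):
        """Update the writer's copy and proactively push to all subscribers."""
        self.local[gpu][address] = value
        for consumer in self.subscribers.get(address, ()):
            self.local[consumer][address] = value

    def read(self, gpu, address):
        """Read only from the GPU's local replica; None if it holds no copy."""
        return self.local[gpu].get(address)
```

In this model a GPU that never subscribed holds no replica, which mirrors the idea that updates are sent only to consumer GPUs rather than broadcast to all of them.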

PDede: Partitioned, Deduplicated, Delta Branch Target Buffer

Niranjan K Soundararajan (Intel Labs); Peter Braun (University of California, Santa Cruz); Tanvir Ahmed Khan, Baris Kasikci (University of Michigan); Heiner Litz (University of California, Santa Cruz); Sreenivas Subramoney (Intel Labs)

Abstract: Due to large instruction footprints, contemporary data center applications suffer from frequent frontend stalls. Despite being a significant contributor to these stalls, the Branch Target Buffer (BTB) has received less attention compared to other frontend structures such as the instruction cache. While prior works have looked at enhancing the BTB through more efficient replacement policies and prefetching policies, a thorough analysis into optimizing the BTB’s storage efficiency is missing. In this work, we analyze BTB accesses for a large number (100+) of frontend bound applications to understand their branch target characteristics. This analysis provides three significant observations about the nature of branch targets: (1) a significant number of branch instructions have the same branch target, (2) a significant number of branch targets share the same page address, and (3) a significant percentage of branch instructions and their targets are located on the same page. Furthermore, we observe that while applications’ address spaces are sparsely populated, they exhibit spatial locality within and across pages. We refer to these multi-page addresses as regions and we show that applications traverse a significantly smaller number of regions than pages. Based on these insights, we propose PDede, an efficient re-design of the BTB micro-architecture that improves storage efficiency by removing redundancy among branches and their targets. PDede introduces three techniques, (a) BTB Partitioning, (b) Branch Target Deduplication, and (c) Delta Branch Target Encoding to reduce BTB miss induced frontend stalls. We evaluate PDede across 100+ applications, spanning several usage scenarios, and show that it provides an average 14.4% (up to 76%) IPC speedup by reducing BTB misses by 54.7% on average (and up to 99.8%).
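
The observations about shared pages suggest why deduplication and delta encoding save storage. The sketch below is a loose software illustration, not PDede's actual micro-architecture: the class `ToyBTB`, the 4 KiB page size, and the entry layout are assumptions made for this example.

```python
# Illustrative sketch (hypothetical structure): store only a page offset when a
# branch and its target share a page, and otherwise store a small index into a
# table of deduplicated target pages instead of the full target address.

PAGE_BITS = 12  # assumption: 4 KiB pages

class ToyBTB:
    def __init__(self):
        self.page_table = []   # deduplicated target page numbers
        self.page_index = {}   # page number -> position in page_table
        self.entries = {}      # branch pc -> (kind, page_table index, offset)

    def insert(self, pc, target):
        offset = target & ((1 << PAGE_BITS) - 1)
        if pc >> PAGE_BITS == target >> PAGE_BITS:
            # Same page as the branch: the offset alone is enough (delta form).
            self.entries[pc] = ("same_page", None, offset)
            return
        page = target >> PAGE_BITS
        index = self.page_index.get(page)
        if index is None:
            # First time this target page is seen: add it once and reuse after.
            index = len(self.page_table)
            self.page_table.append(page)
            self.page_index[page] = index
        self.entries[pc] = ("other_page", index, offset)

    def lookup(self, pc):
        """Rebuild the full target address, or return None on a BTB miss."""
        entry = self.entries.get(pc)
        if entry is None:
            return None
        kind, index, offset = entry
        page = pc >> PAGE_BITS if kind == "same_page" else self.page_table[index]
        return (page << PAGE_BITS) | offset
```

Two branches that jump into the same distant page share one page-table entry here, which is the kind of redundancy the abstract describes removing.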

SparseAdapt: Runtime Control for Sparse Linear Algebra on a Reconfigurable Accelerator

Subhankar Pal, Aporva Amarnath, Siying Feng (University of Michigan); Michael O’Boyle (University of Edinburgh); Ronald Dreslinski (University of Michigan); Christophe Dubach (McGill University)

Abstract: Dynamic adaptation is a post-silicon optimization technique that adapts the hardware to workload phases. However, current adaptive approaches are oblivious to implicit phases that arise from operating on irregular data, such as sparse linear algebra operations. Implicit phases are short-lived and do not exhibit consistent behavior throughout execution. This calls for a high-accuracy, low overhead runtime mechanism for adaptation at a fine granularity. Moreover, adopting such techniques for reconfigurable manycore hardware, such as coarse-grained reconfigurable architectures (CGRAs), adds complexity due to synchronization and resource contention.

We propose a lightweight machine learning-based adaptive framework called SparseAdapt. It enables low-overhead control of configuration parameters to tailor the hardware to both implicit (data-driven) and explicit (code-driven) phase changes. SparseAdapt is implemented within the runtime of a recently-proposed CGRA called Transmuter, which has been shown to deliver high performance for irregular sparse operations. SparseAdapt can adapt configuration parameters such as resource sharing, cache capacities, prefetcher aggressiveness, and dynamic voltage-frequency scaling (DVFS). Moreover, it can operate under the constraints of either (i) high energy-efficiency (maximal GFLOPS/W), or (ii) high power performance (maximal GFLOPS³/W).

We evaluate SparseAdapt with sparse matrix-matrix and matrix-vector multiplication (SpMSpM and SpMSpV) routines across a suite of uniform random, power-law and real-world matrices, in addition to end-to-end evaluation on two graph algorithms. SparseAdapt achieves similar performance on SpMSpM as the largest static configuration, with 5.3× better energy-efficiency. Furthermore, on both performance and efficiency, SparseAdapt is at most within 13% of an Oracle that adapts the configuration of each phase with global knowledge of the entire program execution. Finally, SparseAdapt is able to outperform the state-of-the-art approach for runtime reconfiguration by up to 2.9× in terms of energy-efficiency.

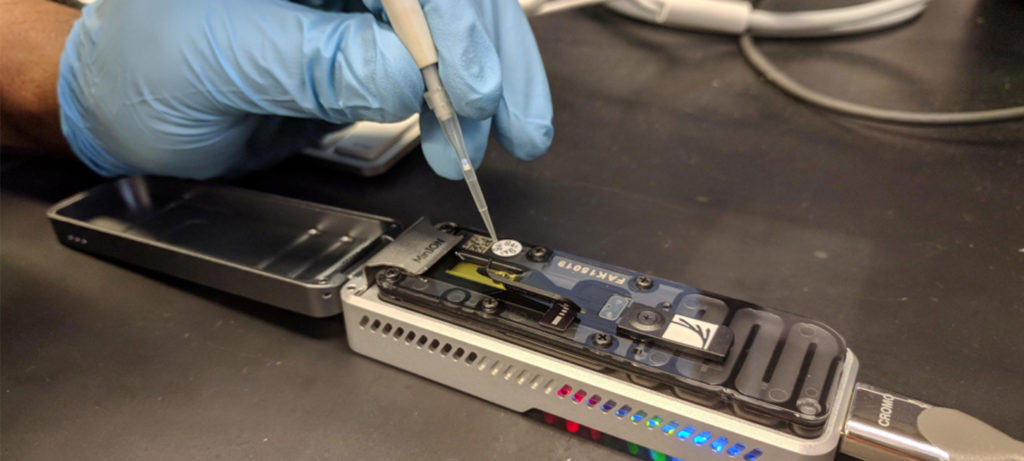

SquiggleFilter: An Accelerator for Portable Virus Detection

Tim Dunn, Harisankar Sadasivan, Jack Wadden, Kush Goliya, Kuan-Yu Chen, David Blaauw, Reetuparna Das, Satish Narayanasamy (University of Michigan)

Abstract: The MinION is a recent-to-market handheld nanopore sequencer. It can be used to determine the whole genome of a target virus in a biological sample. Its Read Until feature allows us to skip sequencing a majority of non-target reads (DNA/RNA fragments), which constitutes more than 99% of all reads in a typical sample. However, it does not have any on-board computing, which significantly limits its portability.

We analyze the performance of a Read Until metagenomic pipeline for detecting target viruses and identifying strain-specific mutations. We find new sources of performance bottlenecks (basecaller in classification of a read) that are not addressed by past genomics accelerators.

We present SquiggleFilter, a novel hardware accelerated dynamic time warping (DTW) based filter that directly analyzes MinION’s raw squiggles and filters everything except target viral reads, thereby avoiding the expensive basecalling step.
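
As background on the technique named above, here is a minimal textbook dynamic time warping routine with a threshold filter on top. It is a sketch for intuition only: the function names, the absolute-difference cost, and the `threshold` parameter are assumptions for this example, and the hardware accelerator may use a different DTW variant and signal normalization.

```python
# Textbook dynamic time warping (DTW) between two numeric sequences.
# Illustrative only; not the accelerator's implementation.

def dtw_distance(query, reference):
    """Return the minimum cumulative |difference| over all monotonic alignments."""
    inf = float("inf")
    n, m = len(query), len(reference)
    # cost[i][j] = best alignment cost of query[:i] against reference[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(query[i - 1] - reference[j - 1])
            cost[i][j] = step + min(
                cost[i - 1][j],      # stretch the reference sample
                cost[i][j - 1],      # stretch the query sample
                cost[i - 1][j - 1],  # advance both
            )
    return cost[n][m]

def keep_read(signal, reference, threshold):
    """Keep a read only if its raw signal aligns closely to the target reference."""
    return dtw_distance(signal, reference) <= threshold
```

Because warping tolerates samples that are stretched in time, a signal such as `[1, 2, 2, 3]` aligns to `[1, 2, 3]` at zero cost, which is why this family of methods suits raw signals whose sampling rate per base varies.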

We show that our 14.3 W, 13.25 mm² accelerator has 274x greater throughput and 3481x lower latency than existing edge GPU-based solutions while consuming half the power. It can meet the expected throughput of the next generation of nanopore sequencers. Its low latency operation enables Nanopore’s Read Until optimization to reduce sequencing cost and time by 38%.

Twig: Profile-Guided BTB Prefetching for Data Center Applications

Tanvir Ahmed Khan, Nathan Brown, Akshitha Sriraman (University of Michigan); Niranjan K Soundararajan (Intel Labs); Rakesh Kumar (Norwegian University of Science and Technology); Joseph Devietti (University of Pennsylvania); Sreenivas Subramoney (Intel Labs); Gilles A Pokam (Intel); Heiner Litz (University of California, Santa Cruz); Baris Kasikci (University of Michigan)

Abstract: Modern data center applications have deep software stacks, with instruction footprints that are orders of magnitude larger than typical instruction cache (I-cache) sizes. To efficiently prefetch instructions into the I-cache despite large application footprints, modern server-class processors implement a decoupled frontend with Fetch Directed Instruction Prefetching (FDIP). In this work, we first characterize the limitations of a decoupled frontend processor with FDIP and find that FDIP suffers from significant Branch Target Buffer (BTB) misses. We also find that existing techniques (e.g., stream prefetchers and predecoders) are unable to mitigate these misses, as they rely on an incomplete understanding of a program’s branching behavior.

To address the shortcomings of existing BTB prefetching techniques, we propose Twig, a novel profile-guided BTB prefetching mechanism. Twig analyzes a production binary’s execution profile to identify critical BTB misses and inject BTB prefetch instructions into code. Additionally, Twig coalesces multiple non-contiguous BTB prefetches to improve the BTB’s locality. Twig exposes these techniques via new BTB prefetch instructions. Since Twig prefetches BTB entries without modifying the underlying BTB organization, it is easy to adopt in modern processors. We study Twig’s behavior across nine widely-used data center applications, and demonstrate that it achieves an average 20.86% (up to 145%) performance speedup over a baseline 8K-entry BTB, outperforming the state-of-the-art BTB prefetch mechanism by 19.82% (on average).
