Michigan team competes in Amazon challenge to make AI more engaging

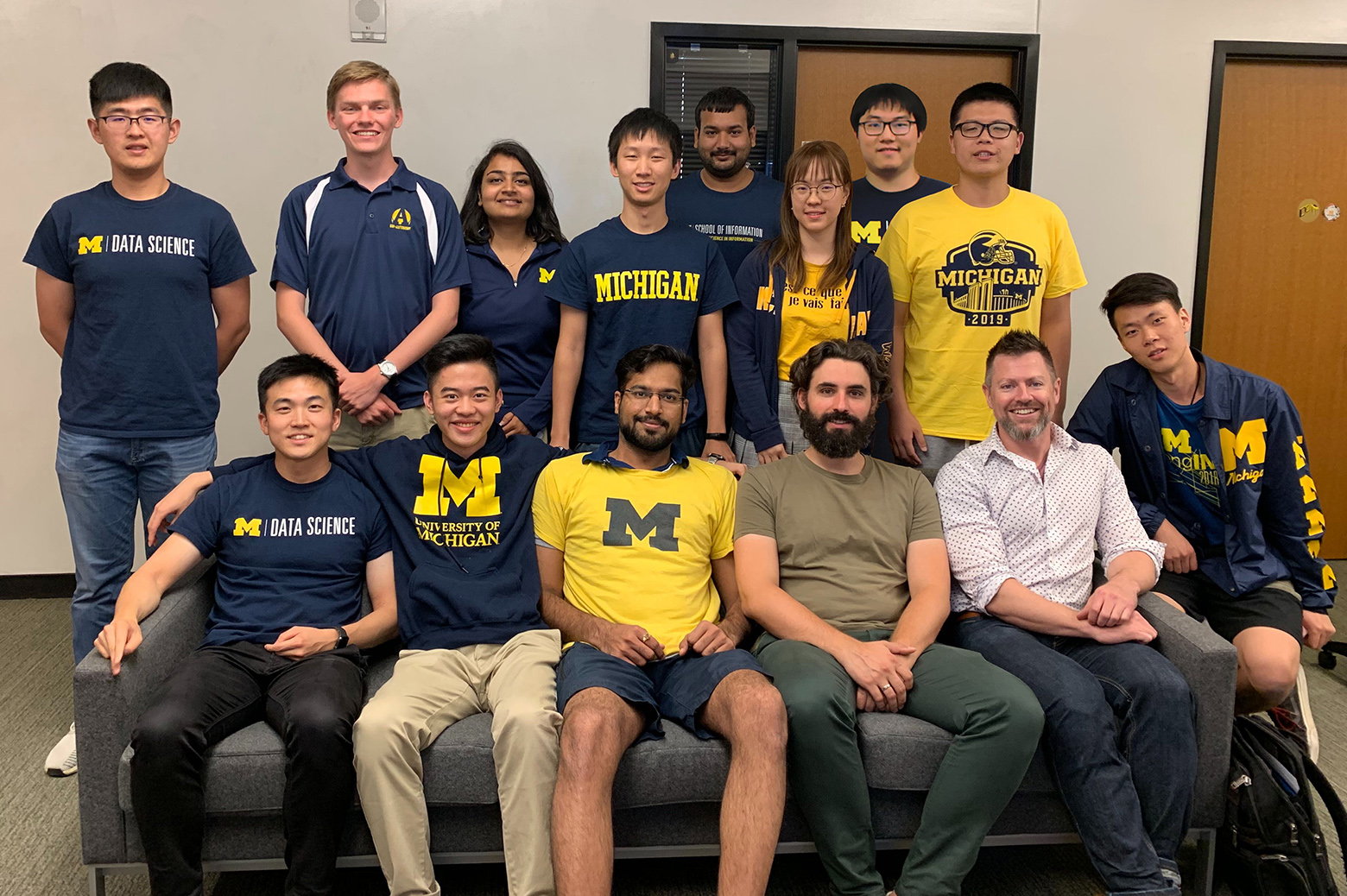

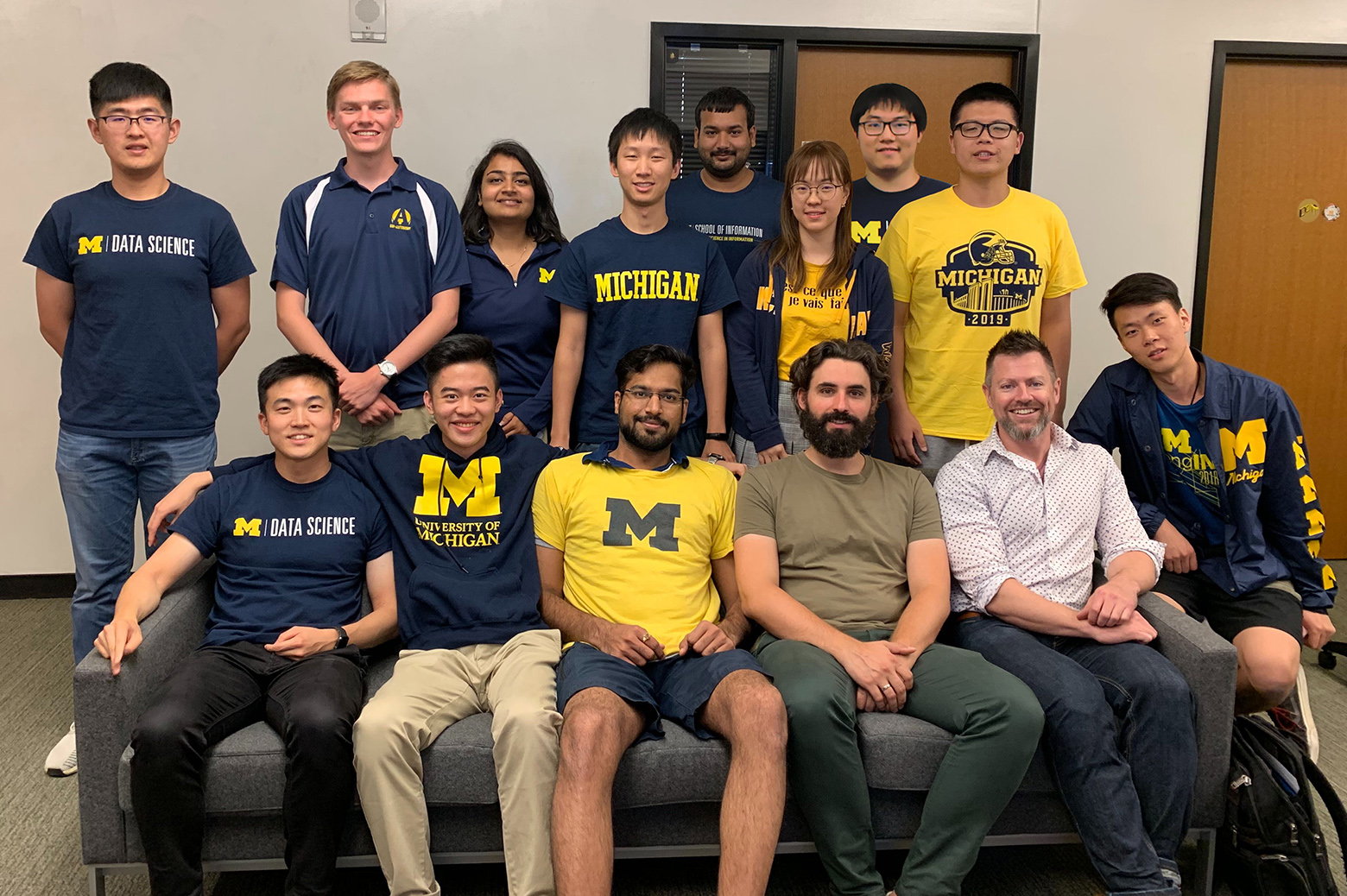

The team of twelve students is one of ten worldwide working to give Amazon’s Alexa more human-like conversational skills.

Artificial intelligence that can hold a “real” conversation remains a sci-fi staple of future technology: all the chatty synthetic personalities that answer questions on our smartphones and smart home speakers haven’t yet given us the *Her* or *2001* experience.

Enter the Alexa Prize Socialbot Grand Challenge. To push the boundaries of conversational AI, Amazon has opened its Alexa virtual assistant platform to ten university teams, tasking them with making the bot a little more human.

Among the ten schools from around the world selected to compete is a team from U-M co-advised by Prof. David Jurgens from the School of Information and Prof. Nikola Banovic. The team of 12 graduate and undergraduate students from the School of Information and CSE will be part of Amazon’s broad mission to make its voice assistant smarter and more conversational so that it can be more useful and engaging for its users.

Now in its third year, the Grand Challenge is truly grand: the competing teams are working to build a bot that can hold a conversation for more than 20 minutes. The teams began work in September 2019, with a prize of $250,000 at stake, and have until February 2020 to qualify for the quarterfinals. The socialbots are currently in beta testing; beginning in December, each team’s bot will be integrated into Alexa’s “Let’s Chat” feature and deployed live for real users to interact with.

For the remainder of the competition, one of the ten teams’ socialbots will be loaded at random whenever a user opts to chat. When users finish a conversation, they can rate the experience on a scale of one to five. The teams can use this feedback to refine their bots iteratively as they develop their core ideas, aiming for better user interactions over the course of the challenge.

The challenge brings together a number of the most difficult problems in AI. Beyond being a chance to improve the Alexa platform, it pushes the students to engage in new, creative research.

“The goal is to extend the reach of conversational AI,” says Chung Hoon Hong, a Master’s student in Data Science and the team lead. Alexa’s functions are split across discrete “skills,” much like the single-purpose apps on a smartphone. Integrating this diverse functionality, or even just a portion of it, creates a complicated scenario that the platform struggles to handle. “Right now if the domain of a conversation is too big then the AI bots have trouble understanding and responding.”

Even socialbots designed for broader, general conversation have found much more success when they focus on a narrow range of topics.

“The success of some of the smart assistants to this point has been in being able to respond to specific commands, to perform specific and somewhat simple tasks,” says Banovic. “But the moment you say ‘Alexa, let’s chat,’ you’re moving away from a command paradigm into a realm where now we are expecting a humanlike conversation.”

These human-like conversations are extremely difficult to engineer. Developers have become quite good at having bots synthesize sentences, but what’s currently lacking, according to Banovic, is the ability to establish shared assumptions and a sense of what is being discussed in the broader context.

Essentially, to be successful, the bot has to be a good listener.

“Nobody engages in a conversation with another person and says afterwards ‘this person was an amazing talker,’” Banovic says. “They usually say they were an amazing listener, and that comes from asking the right kind of questions and showing interest.”

U-M’s bot, called Audrey, will focus on this kind of more general conversation and understanding.

“Our idea is to move away from topical chats and work on the casual conversation itself,” says Hong. “We’re working on active listening, a ‘heartwarming’ social bot. We want our Audrey to be able to tell personalized stories.”

Solving these problems could be what separates a successful interaction with a virtual assistant from a lost user. When you take an assistant out of the box, the first interaction has to be compelling enough to keep you interested. Alexa, however, knows nothing about you when you start talking to it for the first time. To speak with you effectively, a socialbot, much like a person, has to learn about you and keep track of what it already knows.

“It’s hard to have a coherent conversation after multiple turns,” Hong says. “It’s very disjointed right now, there’s no continuity.”

One of the team’s big goals is to have the bot understand who the user is. Building that kind of personal understanding depends on the bot asking the right questions at the right time, and it would ultimately improve the flow of conversation.

“These types of interactive agents are only going to become more prevalent in our daily lives,” says Jurgens. “I hope the students come away not only with the skills to make these agents more natural and empathetic, but also with an appreciation for how to use these agents to help others.”

U-M’s first attempt at this challenge started as a project of three first-year CS students in the Winter 2019 semester. William Chen, Ryan Draves, and Zhizhuo Zhou had some prior experience designing Alexa skills, and they began recruiting graduate students and faculty researchers in natural language processing to advise or work with the team.

With Hong joining the recruiting effort, the final team came to include Vihang Agarwal (Master’s, CSE), Arushi Jain (Master’s, Information), Yuan Liang (Master’s, Data Science), Yujian Liu (undergraduate, CS), Sagnik Roy (Master’s, Information), Yiting Shen (Master’s, CSE), Yucen Sun (undergraduate, CS), and Junjie Xing (Master’s, CSE).

“The University has a lot of resources,” says Zhou. “Anyone with an idea can reach out, gather a team, and create something awesome.”

The interdisciplinary group’s workflow and day-to-day operations are not unlike those of a full-fledged industry design team. The team is split across the different technical tasks that, together, go into designing an integrated, working socialbot. These include natural language understanding and generation, gathering data from sources such as Reddit and The Washington Post, and using knowledge graphs to help searches determine the relationships between different topics. Students are assigned to the tasks that suit their expertise at each phase of development.

For many on the team, the Grand Challenge represents their first real-world product design cycle.

“They’re really going to learn what it means to develop a challenging system that interacts with real people,” says Banovic. “They are now creating a socio-technical system in a way that perhaps they never had an opportunity to. They are creating technologies that are affecting the lives of real people.”

This mode of work, which is difficult to emulate in a college course, presents the students with a number of organizational and design challenges.

“It has reinforced for me that structural product management is very important,” says Chen. “A week into development, we already had multiple branches and a dozen code bases. Amazon demands quite a bit of progress in a very short amount of time.”

But the students are motivated and well-equipped to meet the challenge. As they move on to live testing with real Alexa customers on December 4, they’re keen to keep their goals in mind and create a socialbot that stands apart from the crowd.

“We’re not just creating something to beat the rating,” says Hong. “The goal of this competition is to work on research, and work on something that’s not been done before.”