Power-hungry AI: Researchers evaluate energy consumption across models

The tech industry is a major and growing contributor to global energy consumption. Data centers, in particular, are responsible for an estimated 2% of electricity use in the U.S., consuming up to 50 times more energy than an average commercial building. That number is only trending up as increasingly popular large language models (LLMs), which process huge amounts of data, are deployed in data centers. Based on current data center investment trends, LLMs could emit the equivalent of five billion U.S. cross-country flights in one year.

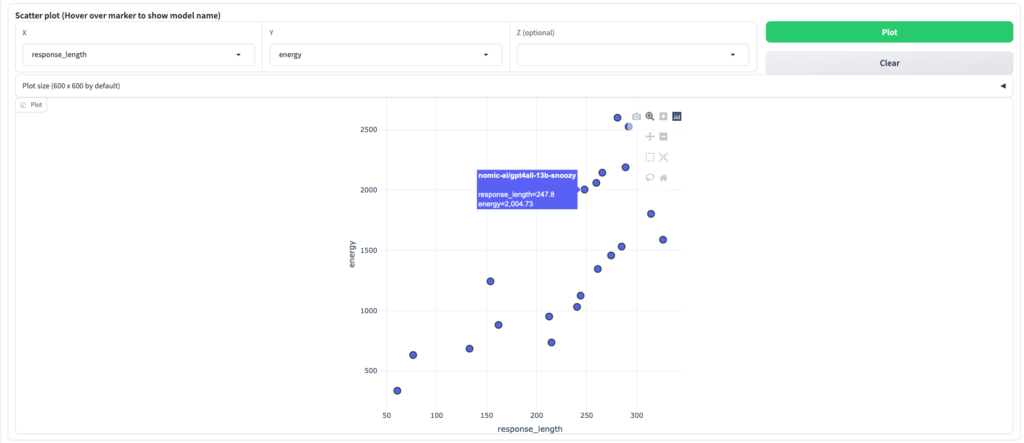

In response to this issue, a team of researchers at the University of Michigan has developed the ML.ENERGY Leaderboard, which evaluates and ranks open-source LLMs according to how much energy they consume. Growing out of the team's prior development of Zeus, a framework for measuring and optimizing the energy consumption of the deep learning models that power modern AI, the ML.ENERGY Leaderboard aims to give developers and end users alike a holistic view of how much energy LLMs consume, so that they can factor a given application's energy demands into their decision to use it.

“Recent years have seen a huge rise in large language models, but the conversation has revolved almost exclusively around performance,” said Jae-Won Chung, doctoral student in computer science and engineering at U-M. “No one is really thinking about energy consumption.”

The information that has emerged surrounding AI’s energy use has been incomplete at best. The ML.ENERGY Leaderboard is the first known initiative to dive deeper into the dynamics influencing energy use among open-source LLMs and identify which models are consuming more or less energy and why.

“This is the first analysis of its kind,” said Mosharaf Chowdhury, Morris Wellman Faculty Development Professor of Computer Science and Engineering at U-M. “The literature that does exist on AI energy consumption is either anecdotal or based on very basic calculations. No one has tried to drill down to the specifics in a systematic and principled way to determine how much energy AI is consuming.”

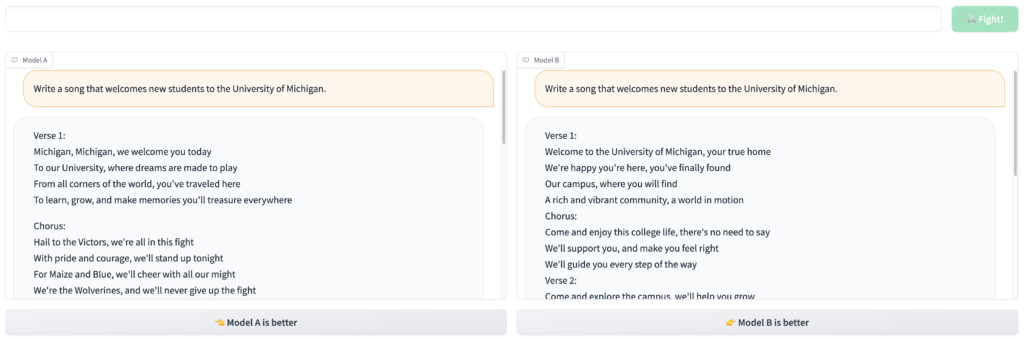

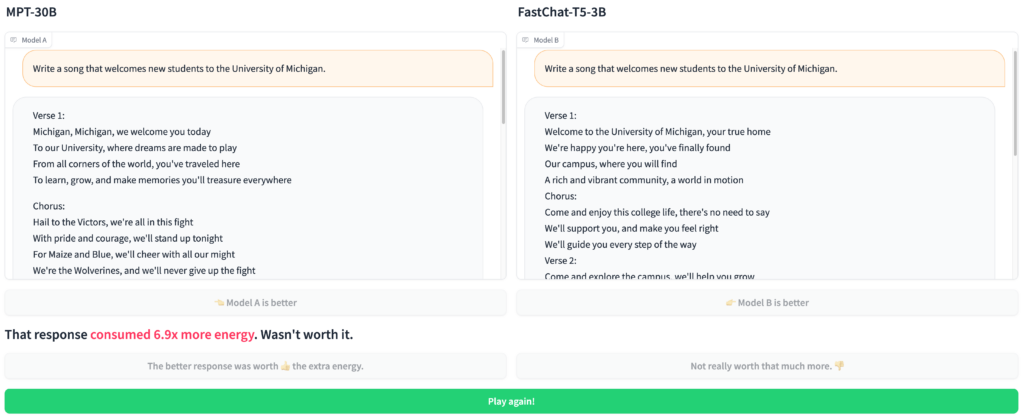

Accompanying the Leaderboard is an interactive online serving system that gives users a blind, side-by-side comparison of the responses two different LLMs generate to the same prompt. After deciding which response they prefer, the user is shown the two models' relative energy consumption and can then revise their choice. The team hopes the tool will guide those seeking to balance performance and energy consumption in their choice of LLMs, ultimately promoting the use of more energy-efficient models, while also shedding light on how users choose between LLMs.

“The objective of the serving system is to learn about how people perceive large language models,” said Jiachen (Amber) Liu, doctoral student in computer science and engineering at U-M. “We want to parse how users balance response quality and overall performance with energy considerations, and how much that affects their choice.”

The information gleaned from the Leaderboard is useful to multiple stakeholders, whether it is the developer creating or modifying a model, the civil engineer designing and constructing a data center, or the end user. Given the tremendous energy burden of data centers, any increase in energy efficiency translates into significant cost savings, which trickle down to everyday people as well.

“This initiative goes far, far beyond computer science,” said Chowdhury. “We not only want to collect the numbers but also build the tools so that anyone can use and incorporate this software with their existing systems.”

The ML.ENERGY Leaderboard is part of a broader initiative spearheaded by the SymbioticLab at U-M. In addition to Chowdhury, Chung, and Liu, the Leaderboard team also includes Zhiyu (Julius) Wu and Ding (Eric) Ding, undergraduate students in computer science at U-M.