Student team brings augmented reality to the operating room

With the help of an augmented reality headset, three students helped a doctor stay focused in the operating room.

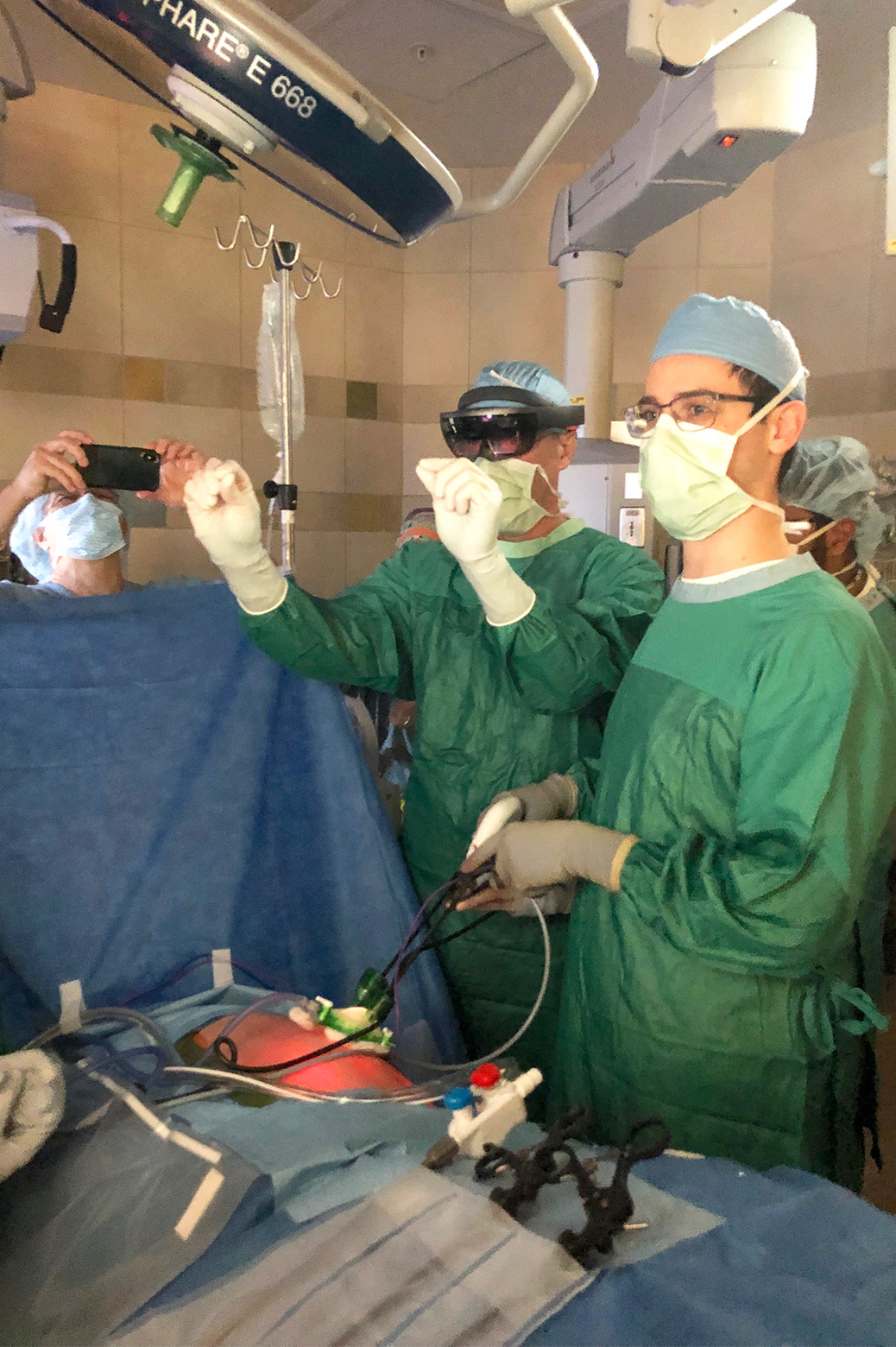

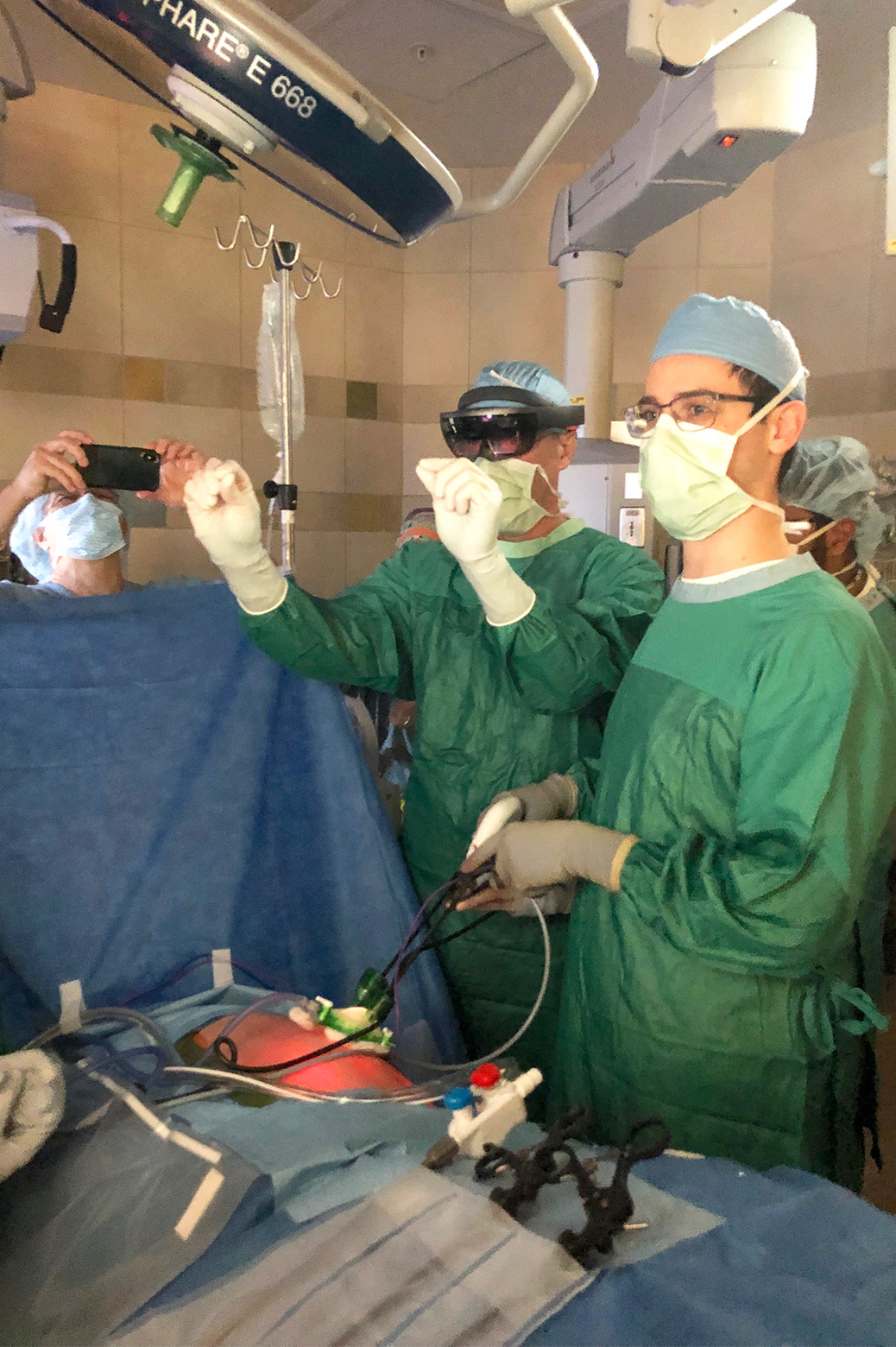

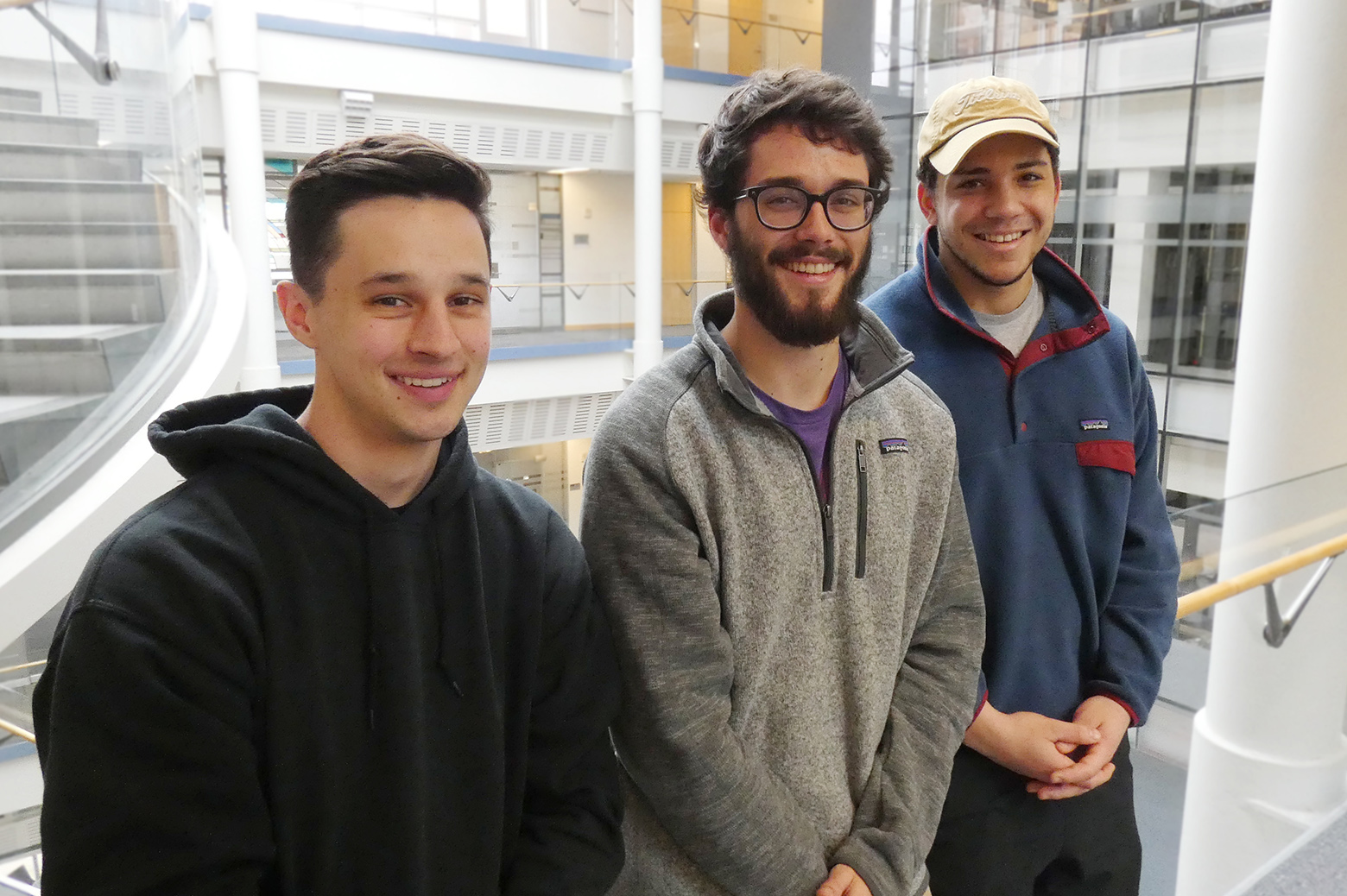

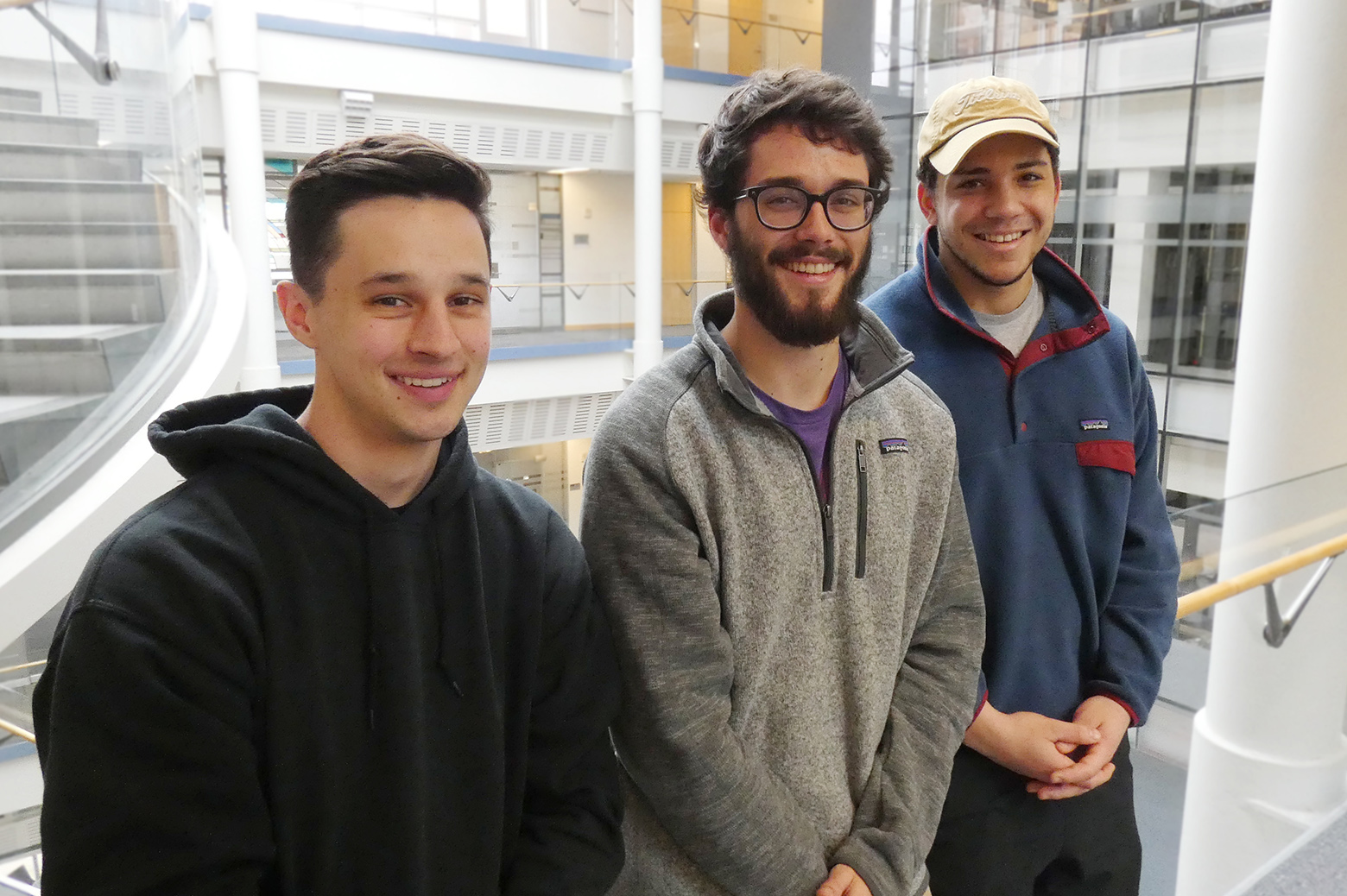

Three CS students and alumni have launched operating room monitors and screens into the virtual world of augmented reality. Mitchell Bigland (BS CS 2019), Nicholas Keuning (BS CS 2018), and Chase Austin (BS CS expected Fall 2019), working with Dr. David Chesney and Dr. Marcus Jarboe of Pediatric Surgery, have developed an app for the Microsoft HoloLens that streams a video feed of a patient’s internals directly to the surgeon’s field of vision, helping surgeons keep their eyes on their tools.

Keeping surgeries minimally invasive has many benefits for a patient – smaller scars, less time in the operating room, faster recovery, and fewer pre-operation jitters among them. A procedure called laparoscopy has become a powerful way for doctors to stick to smaller cuts in a number of common surgeries.

The procedure takes its name from the laparoscope, a slim video camera and light that can fit into small incisions and give surgeons an internal view of the patient. Once it is inserted, doctors watch a video monitor in the operating room to see what’s happening. Without those tools, they’d have to make a much larger opening.

But the use of monitors poses some challenges to surgeons and nurses. While keeping procedures more comfortable for patients, it leaves surgeons multitasking and looking from the monitor back to the tools as they maneuver with the camera. On top of that, the screens tend to clutter the operating room and can be difficult to position in the doctor’s field of view.

“The doctors told us that it can be hard to see,” Keuning explains. “At times they require assistance from nurses to point things out on the screen.”

So the team took the feed from the surgeon’s laparoscope and streamed it to a HoloLens, where it’s always in the user’s field of view and easy to reposition or resize with gestures and voice control.

“That will maximize the amount of time they can spend actually looking at the patient,” says Bigland, “and, we believe, improve how well they can see the actual video.”

The app’s other main feature is a scan gallery that gives doctors the ability to load images of previous diagnostic scans, such as CAT scans or MRIs, and arrange them alongside the video stream. Users can manipulate the position and size of the scans, as well as alter their brightness and contrast in real time.

The project originated in Fall 2018 in Chesney’s EECS 495 course, Software Development for Accessibility, which connects students with hospital clients looking for software solutions to particular issues in their field. It was there that the team met Jarboe; all three submitted similar proposals after he described his difficulties in the operating room to the class. The trio opted to continue development with him in the Winter semester, ultimately bringing a fully functioning prototype to a live procedure in May.

“Dr. Chesney was very good at bringing together enough pieces that you could then turn it into a real opportunity for a concrete project,” says Keuning.

The team had planned to have a functioning proof of concept by the end of the year, but the actual trial run in the operating room took them by surprise.

“It kind of came out of the blue,” says Bigland. At that time the app had been up and running with webcam streams, so this opportunity put the heat on to test a finished version with standalone cameras. It was Jarboe who suggested the live trial, underlining his enthusiasm for the idea that had encouraged the team throughout development.

“Our ability to work with actual doctors at the hospital has really helped us in determining what’s useful,” says Bigland. “We’d go visit Dr. Jarboe while he had other physicians stopping in and he would show them the HoloLens and our app.”

“That access to their expertise and off-the-cuff suggestions was super helpful,” says Keuning.

This project was the first medical application for the students on the team, all of whom studied in the College of LSA. The trio didn’t initially plan to pursue CS when they came to U-M, but were inspired to make the jump after taking EECS 183, Elementary Programming Concepts, which is taught by Dr. Bill Arthur. The project gave them experience with new technology and programming tools, as well as the full client-focused development pipeline.

“It was a good opportunity to learn those things while creating something that was cool and could actually be impactful in the operating room,” says Austin.

Austin will be graduating after the Fall 2019 semester, Keuning is starting at a software consultancy in Grand Rapids this summer, and Bigland is moving to Seattle to work for Amazon.