U-M CSE research team advances to top five in Amazon Alexa Prize SimBot Challenge

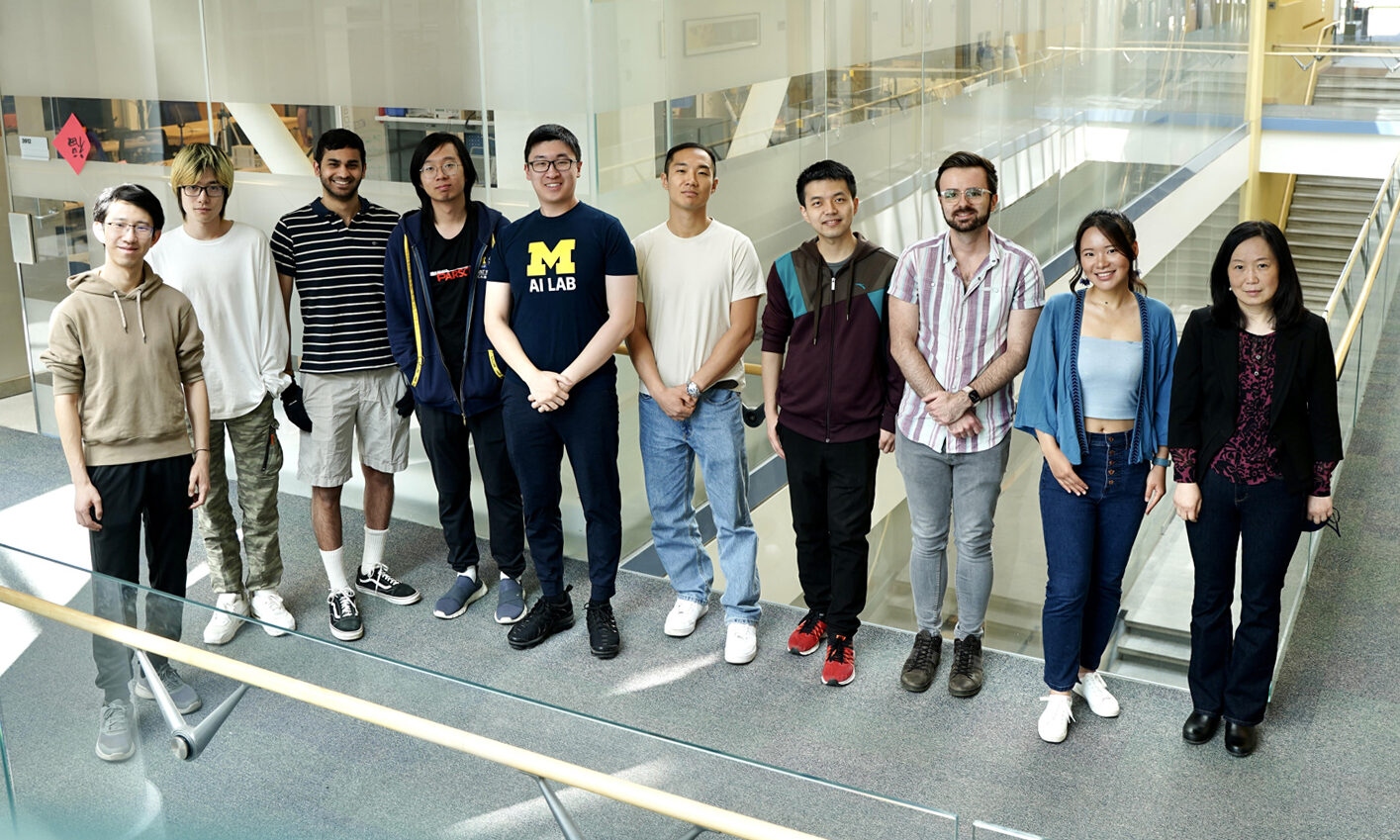

A team of computer science researchers at the University of Michigan is a finalist in a worldwide contest sponsored by Amazon in which university teams advance the development of AI-based virtual assistants. One of just five finalist teams, Team SEAGULL (Situated and Embodied Agents with the ability of GroUnded Language Learning), led by doctoral students Yichi Zhang and Jed Yang and advised by Prof. Joyce Chai, consists of students from Chai’s Situated Language and Embodied Dialogue (SLED) Lab in CSE. Much of the lab’s work focuses on the intersection of natural language processing and embodied AI.

The Alexa Prize SimBot Challenge was initiated by Amazon in October 2021 and will conclude in May of this year. Amazon launched the Challenge with a focus on “helping advance development of next-generation virtual assistants that will assist humans in completing real-world tasks by continuously learning, and gaining the ability to perform commonsense reasoning.”

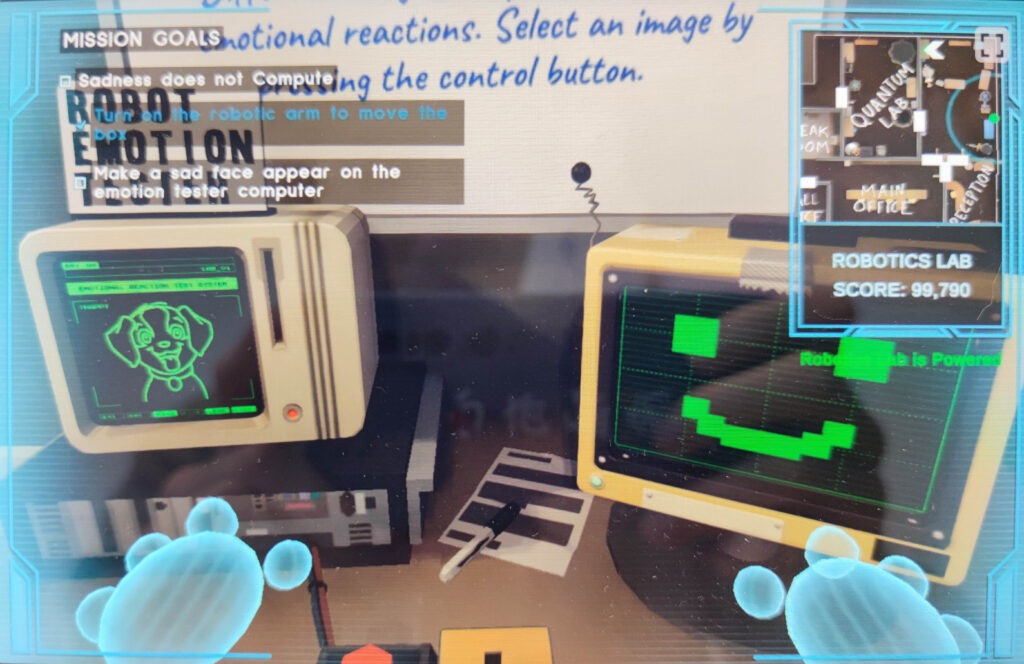

University teams participating in the challenge have been tasked with building machine learning models for natural language understanding and human-agent interaction. Each bot entry is evaluated on its ability to respond to user commands and multimodal sensory inputs in order to execute game-like tasks on Amazon Echo Show devices. The bots operate within a virtual environment, and users can watch the effect their inputs have.

“The objective of the challenge is to work with agents over a virtual interface and see if they are able to follow natural language commands to complete a task,” said team member and co-lead Jed Yang. “Participating in the challenge provides us a means to further understand how humans can communicate with a robot and the capabilities that a robot should have.”

After a preliminary phase that ended in April 2022, Team SEAGULL advanced as a semifinalist to the next phase of the competition. While the first phase consisted of offline development of the teams’ agents, phase two, which began in August 2022, included interacting with real human users. As of April 6, 2023, Team SEAGULL was selected as one of five finalists in the final phase of the competition.

“Tackling this complex problem presents numerous challenges spanning various sub-fields of AI, including visual understanding, situated language comprehension and reasoning, task planning, and human-robot dialogue modeling,” said team lead Zhang. “Additionally, we must address engineering concerns including continuous integration and development, data persistency, and latency optimization. I’m fortunate to lead a highly competitive team with diverse skill sets, allowing us to effectively collaborate and cover all relevant aspects. I genuinely enjoy working with everyone on the team and have gained invaluable knowledge throughout this journey.”

“The team is amazing,” said Prof. Chai. “I’m truly impressed to see how they work together, coming up with innovative ideas and overcoming each and every obstacle along the way.”

During the finals phase, the university teams compete to optimize their bots with the aim of being the best at responding to commands and multimodal sensor inputs from within a virtual world. During this phase, Alexa customers are able to interact with virtual robots powered by the teams’ AI models on their Amazon Echo Show or Fire TV devices, seeking to solve progressively harder tasks within a virtual environment.

To try one of the teams’ entries, a customer says, “Alexa, play with robot” while using an Echo Show or Fire TV device. The display shows a virtual world from the robot’s point of view and provides goals, as well as hints that help users instruct the bot to complete each goal. The user communicates with the robot using speech. The bot perceives the environment, reasons about the user’s commands to interpret them, navigates the environment, and takes actions to complete the task goal. During this process, the bot can carry on a dialogue with the user, e.g., to ask for clarification or a hint.

After the interaction, users may provide feedback and ratings for their experience. They will not know which of the teams’ bots they have interacted with. The feedback is routed to the appropriate university teams to help advance their research.

April 28 is the last date for the teams to make code changes. The final standings in the challenge will be determined during the SimBot Challenge finals event scheduled for the first week of May. Publications from all ten semifinalist teams will be featured on the Amazon Science website later this year.

Team SEAGULL includes CSE PhD students Yuwei (Emily) Bao, Yinpei Dai, Ziqiao (Martin) Ma, Shane Storks, Jianing (Jed) Yang (team co-leader), Peter Yu, and Yichi Zhang (team leader); robotics master’s student Nikhil Devraj; and computer science undergraduate Jiayi Pan. The team’s advisor is Prof. Joyce Chai.